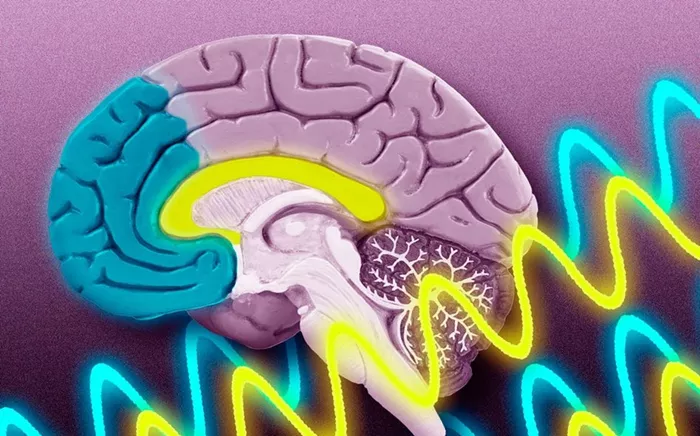

A recent study by Emmanuel Biau and colleagues at the University of Liverpool sheds light on how the human brain integrates auditory and visual information to form cohesive memories.

Published in The Journal of Neuroscience, the research explores the neural mechanisms behind recalling memories that combine what we see and hear, such as remembering a friend’s voice alongside their facial expressions.

The team showed participants movie clips of people speaking and manipulated the timing between the speech sounds and the speakers' lip movements.

When sounds and lip movements were synchronized, brain activity in two specific regions exhibited rhythmic oscillations during viewing. Remarkably, this oscillatory activity reappeared when participants later recalled the clips.

Conversely, when speech sounds lagged behind lip movements, these oscillations diminished during both viewing and recall.

Biau explains, “If auditory and visual speech inputs arrive simultaneously, their chances of being linked in memory increase because they align with the same phase of neural activity. This synchronization is disrupted when the inputs are asynchronous.”

The findings suggest that synchronized oscillatory brain activity plays a key role in binding auditory and visual cues into unified memories. Although the results are promising, the researchers note that further studies are needed to fully understand this integrative process.
